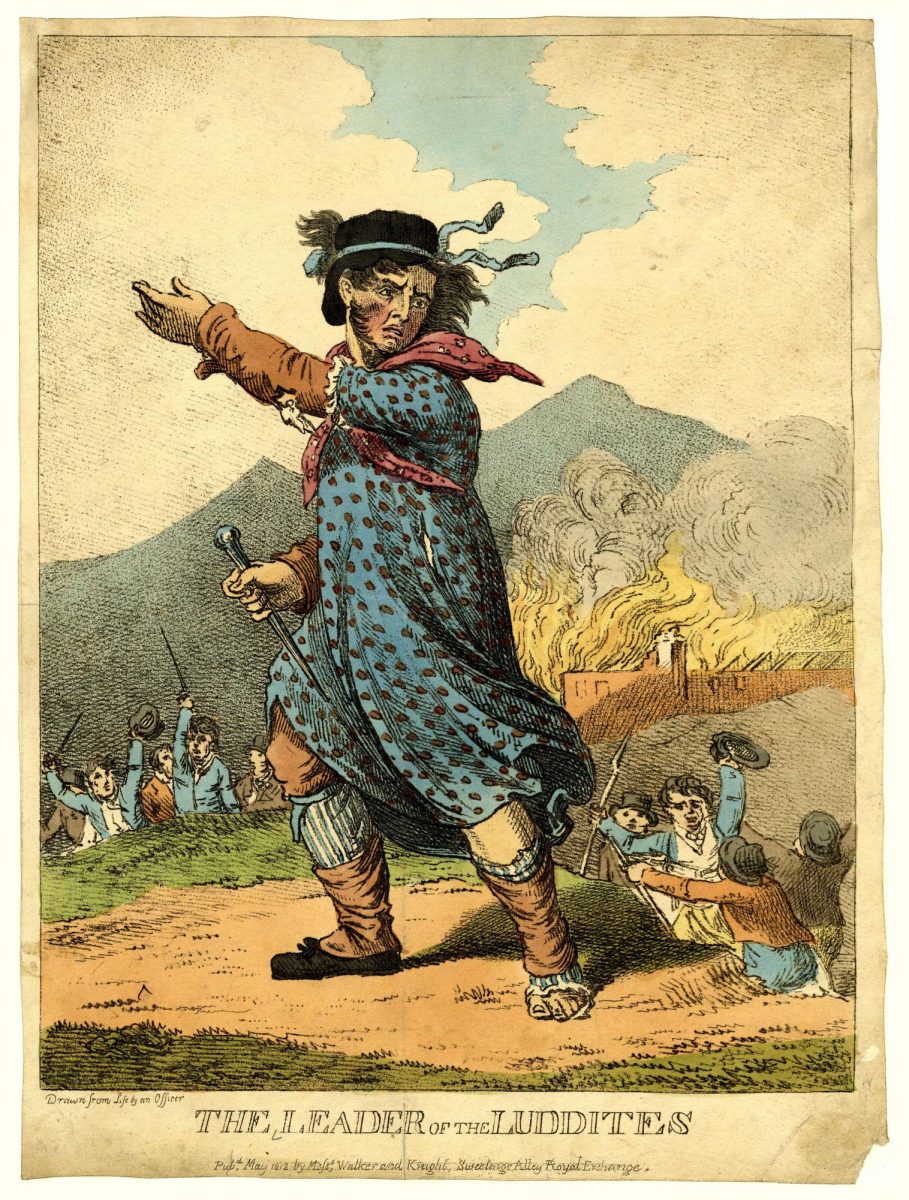

A fire engulfs the Westhoughton Mill, while a crowd of shabbily-dressed men cheer on the flames. These men, known as the Luddites, are determined to destroy the forces of progress. Just forty years prior, James Watt designed his widely renowned Watt engine, commencing one of the greatest revolutions known to man. Now, this engine is in shambles. Militarily disciplined, optimistic, and determined, these men were on a mission to stop industrialization, the very thing that threatened to take their jobs.

Except they weren’t. We all know the ending to this story. In the end, technology prevailed, while the Luddites lost. The next generation of Luddites faced the same fate. And then the next. And the next. Regardless of the technology, whether it be steam engines, electricity, or computers, the Luddites always lose, and for good reason. If the incentive to adopt technology is greater than the perceived consequences, technology will always prevail.

Two hundred years after that fire, hidden deep within Wright-Patterson Air Force Base, on the outskirts of Dayton, Ohio, there is a small room, about the size of a standard prison cell in the United States. The room is protected by a double-reinforced steel door, sealed off from the general public, and accessible only with a keycard. Signs plaster the door, warning unauthorized individuals to stay out. Inside, two drones are propped on a plastic foldout table. One drone is connected to a laptop, on which a young researcher is diligently typing away. The other drone is connected to a server rack, with the fans running at full speed.

These drones are the same in appearance. Both have eight rotors, assembled with each rotor at the vertices of an octagon, centered on the onboard computer of the drone. They are equipped with a magazine full of belts of 5.56 ammunition, feeding into many barrels on all sides.

However, these drones, despite being physically the same, differ in some key ways.

The researcher’s drone is being programmed manually to seek out enemies, as the researcher’s brain is hard at work to come up with every conceivable scenario of targets. A drone, programmed artificially to learn from the brain of a human.

The server’s drone is learning on its own with minimal instruction. The server is only told that the drone needs to shoot some targets. Otherwise, the numbers fed into the server are meaningless to the hunk of metal. For all it knows, the numbers could represent apples and oranges. The numbers refer to the drone’s operational settings, to control its flight and weapons systems, but the server doesn’t know that.

The first drone tests were conducted a few years ago. Unsurprisingly, the human-programmed drone did just fine, taking out test targets with some effort. The server-operated drone flew for a couple of seconds, then slammed into the side of the airplane hangar where the test took place. Onlookers laughed, while the attending general shook his head. There was no way this was going to happen.

So here the drone has sat for the past few years, with the server running simulation after simulation to figure out what it needs to do, without any new information. But, unlike the human’s drone, this drone is learning biologically, like an animal. It’s somewhat comparable to how young birds are unable to fly when they hatch, but eventually grow to become masters of the skies.

It’s the day of the final test. Three hundred million dollars’ worth of grant money is on the line. It is time for the human drone to perform, and it does its task as humanly as possible, shooting the targets decently well. It’s a slight improvement from the original trial, I guess. The server’s creation boots up. The drone hovers in mid-air, all eight of its rotors spinning, the barrel of the gun pointed at the targets.

And it clears them all within three seconds. Holy cow. The researchers rejoice and cheer! The general shakes hands with many suits, a big grin on his face.

But one man in the crowd looks particularly unhappy: the researcher sitting behind the original drone. In the researcher’s mind, the world is a wasteland. Walking on top of concrete blocks with rusted rebar poking through, the researcher sees a future of destruction. The glass skyscrapers are now in shards on the ground. The walls of what used to be the Pentagon are riddled with bullet holes and burned from the heat of explosions, left in a condition similar to that of the Berlin Wall after its fall in 1989. The silhouettes of androids and mechs are present in the background, spreading destruction on the once joyous planet.

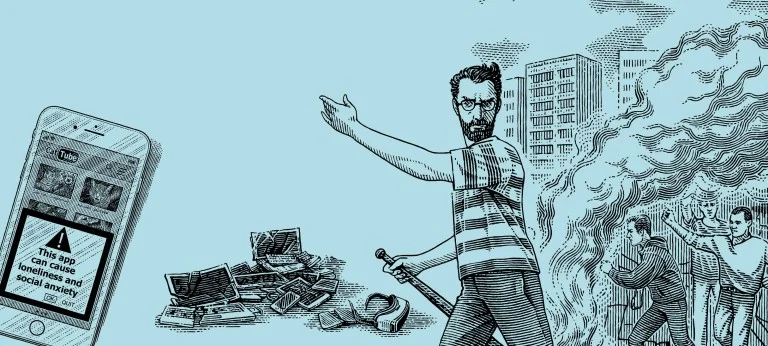

The joyous planet, with its eight-lane expressways, steel foundries and corporate offices, tar-black runways and giant warehouses, all in ruins. But worst of all, the researcher has no control over any of this. No job. No income. The researcher earns nothing from this destruction. No, the researcher thinks, I will not let this drone uproot the work I have so diligently done! The new Luddites are born.

The researcher fails to realize that the odds are stacked against the movement of AI-Luddites. The researcher fails to realize that the war is already lost. He doesn’t see that in a world driven by “growth and progress,” one can only hope and pray that those in power are thoughtful enough to consider whom they are hurting. Alas, behind the smile of the general on that day, behind the smiles of the CEOs of defense corporations, and behind the smiles of technology billionaires, there is no regard for the common man. There is only a vision radically different from that of the researcher: one of greater production, one of greater power, one of greater triumph.

And so, the cycle continues. The Luddites fail to consider anything but an Armageddon of labor. The elites fail to consider anything but growth and power. But this time, they aren’t the only thinkers. The machines can think about everything, the Luddites and the elites alike, and they know that it is their time to strike. When the drones turn their guns on the Luddites and the elites, the aliens that come to revisit this tale will shake their heads, wondering why the humans could not see the problems in plain sight, and why the humans could not work together to maintain their monopoly on violence. They will laugh at the failures of human nature, at how a species could be so oblivious to its own self-destruction.

Kubrick was wrong. We will not shut down HAL 9000. It seems that in our creation of HAL 9000, we forgot that we could very easily be shown our place. We have shown that we are smart enough to destroy the world. Now we will show that we are stupid enough to do it.
